Apache Parquet is a open source, column-based file format that's great for storing and retrieving data quickly. It has smart compression and encoding methods to handle large amounts of data easily. It's perfect for both regular and interactive tasks. It is designed to be a common interchange format for both batch and interactive workloads.

Parquet works really well with Spark, a widely-used data processing tool. But it's not limited to just Spark - you can also use it with other big data tools like Hive, Pig, and MapReduce.

Advantages of Parquet

Compression algorithms reduces the size of data files, making storage & data transfer more efficient.

There are two specific goals with data compression:

Reduce data volume and save on storage space: By compressing data, you can significantly decrease the amount of space it occupies on a storage device. This can be particularly beneficial when dealing with large files or when storage space is limited.

Enhance disk I/O and data transfer across networks: Compressed data requires less time to read from or write to a disk, resulting in improved input/output (I/O) performance.

Saves on cloud storage space by using highly efficient column-wise compression, and flexible encoding schemes for columns with different data types.

Increased data throughput and performance using techniques like data skipping, whereby queries that fetch specific column values need not read the entire row of data.

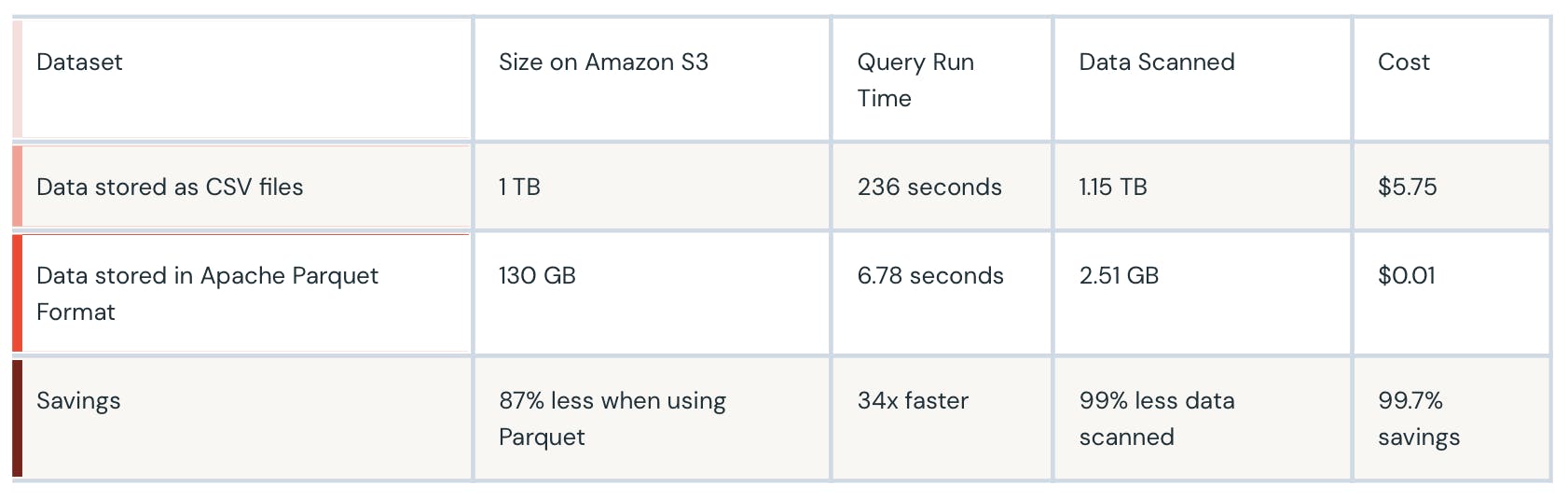

Parquet vs CSV

CSV is a simple and common format that is used by many tools however it is not efficient when it comes to scale. Also, storing it on cloud will increase the charges as it will occupy larger volumes when data grows.

On the other hand, parquet has significantly decreased storage requirements for its users by at least one-third on large datasets. Additionally, it has significantly improved scan and deserialization times, leading to reduced overall costs.

Image Source : Apache Parquet

Parquet format enhances compression, with homogeneous data leading to significant space savings. I/O is reduced by scanning only required columns, and better compression lowers input bandwidth. Column-wise data storage allows for optimized encoding, improving instruction predictability on modern processors. Parquet is ideal for write-once-read-many applications.